What is GPT-Engineer?

GPT-Engineer is an open-source tool developed by Anton Osika that transforms project design documents into full-fledged codebases using the GPT-4 language model. Unlike typical code generation tools, GPT-Engineer is designed to work interactively: it asks for clarifications, identifies gaps, and iterates on requirements to produce usable software.

In essence, GPT-Engineer is more than a static code generator; it is an AI coding assistant capable of:

- Understanding complex project requirements

- Generating modular, well-documented code

- Working across frameworks, use cases, and industries

Getting Started: Setting Up GPT-Engineer

Setting up GPT-Engineer is a straightforward process:

- Clone the GPT-Engineer repository from GitHub

- Create a Python virtual environment

- Install the required dependencies from requirements.txt

- Set your OpenAI API key in the OPENAI_API_KEY environment variable

- Write a detailed design description in your main.prompt file

Once configured, GPT-Engineer reads your prompt, asks clarifying questions, and generates code accordingly.
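As a rough illustration of the configuration step, the sketch below creates a project folder and writes the design description into it. It assumes the prompt file is named main.prompt, as described above, and that the API key is read from OPENAI_API_KEY; the helper `prepare_project` is hypothetical and not part of GPT-Engineer.

```python
import os
from pathlib import Path


def prepare_project(project_dir: str, design_description: str,
                    prompt_name: str = "main.prompt") -> Path:
    """Create a project folder and write the design description into it.

    Hypothetical helper, not part of GPT-Engineer. The default file name
    follows this article and may differ between GPT-Engineer versions.
    """
    # GPT-Engineer needs an OpenAI key; fail early if it is missing.
    if not os.environ.get("OPENAI_API_KEY"):
        raise RuntimeError("Set OPENAI_API_KEY before running GPT-Engineer")

    folder = Path(project_dir)
    folder.mkdir(parents=True, exist_ok=True)

    # Store the trimmed description with a single trailing newline.
    prompt_path = folder / prompt_name
    prompt_path.write_text(design_description.strip() + "\n", encoding="utf-8")
    return prompt_path
```

For example, `prepare_project("projects/kv-db", design_text)` would create projects/kv-db/main.prompt; you would then run GPT-Engineer against that folder with the command documented in the README of your installed version.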

Powered by GPT-4

GPT-Engineer builds on GPT-4, one of the most advanced language models available. With GPT-4 as its engine, GPT-Engineer can interpret nuanced design prompts, produce a coherent software architecture, and even write documentation.

This makes it especially effective for:

- Drafting boilerplate code

- Creating RESTful APIs

- Developing data models and CLI interfaces

- Structuring test frameworks

Use Case: Creating a Key-Value Database

A compelling example of GPT-Engineer in action is the generation of a simple key-value database using Python and FastAPI.

Prompt Design

Developers can begin by asking GPT-4 to draft the design prompt, using a request such as:

“Design a simple key-value database using Python’s standard library and FastAPI. Include a command-line interface and RESTful API for CRUD operations.”

Output

The result includes:

- A Python library module for connecting to and querying the database

- A command-line REPL tool

- FastAPI endpoints for remote interactions

- In-memory caching logic using functools.lru_cache

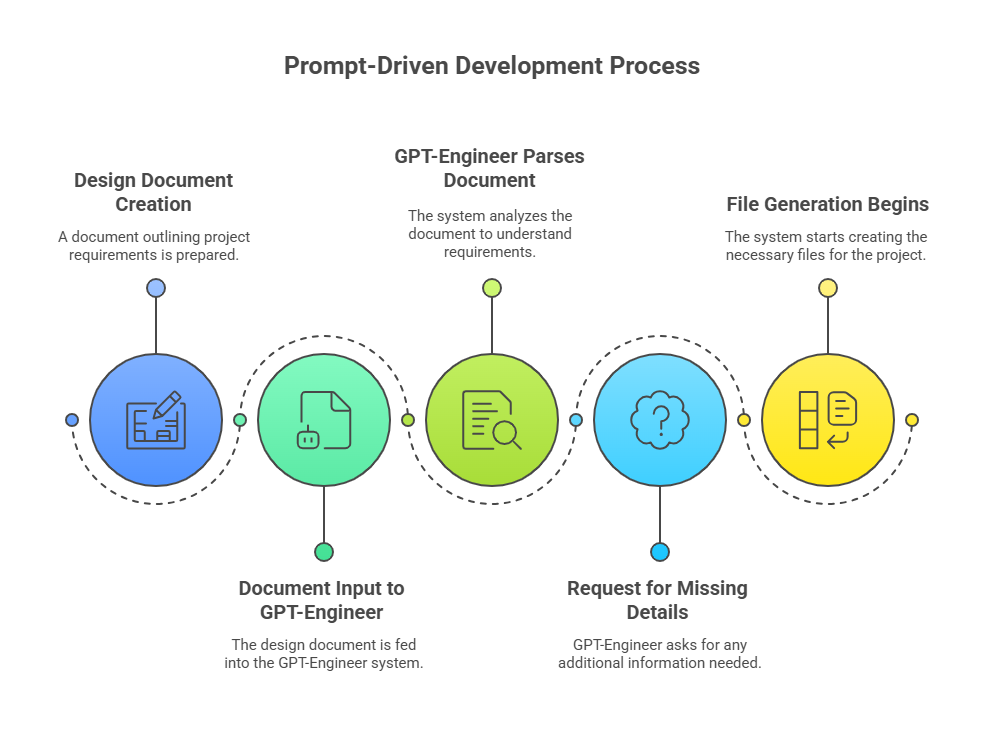
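The snippet below is a minimal, hand-written sketch of what the library module and command-line REPL of such a project could look like; it is not GPT-Engineer's actual output. It assumes JSON-file persistence and omits the FastAPI layer so it runs on the standard library alone. The class names `KeyValueStore` and `KVShell` are hypothetical.

```python
import cmd
import json
from functools import lru_cache
from pathlib import Path
from typing import Optional


class KeyValueStore:
    """Tiny key-value store persisted to a JSON file (hypothetical sketch)."""

    def __init__(self, path: Optional[str] = None):
        self._path = Path(path) if path else None
        self._data: dict = {}
        if self._path is not None and self._path.exists():
            self._data = json.loads(self._path.read_text(encoding="utf-8"))
        # Per-instance LRU cache around the raw lookup; writes must clear it.
        self._cached_get = lru_cache(maxsize=128)(self._lookup)

    def _lookup(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def get(self, key: str) -> Optional[str]:
        return self._cached_get(key)

    def set(self, key: str, value: str) -> None:
        """Create or update a key (the C and U of CRUD)."""
        self._data[key] = value
        self._commit()

    def delete(self, key: str) -> bool:
        if key not in self._data:
            return False
        del self._data[key]
        self._commit()
        return True

    def _commit(self) -> None:
        # Invalidate cached reads so get() never returns a stale value.
        self._cached_get.cache_clear()
        if self._path is not None:
            self._path.write_text(json.dumps(self._data), encoding="utf-8")


class KVShell(cmd.Cmd):
    """Command-line REPL over a KeyValueStore."""

    prompt = "kv> "

    def __init__(self, store: KeyValueStore, stdout=None):
        super().__init__(stdout=stdout)
        self.store = store

    def do_set(self, arg: str) -> None:
        """set KEY VALUE: create or update a key."""
        key, _, value = arg.partition(" ")
        if not key or not value:
            self.stdout.write("usage: set KEY VALUE\n")
            return
        self.store.set(key, value)
        self.stdout.write("OK\n")

    def do_get(self, arg: str) -> None:
        """get KEY: print the value, or (nil) if absent."""
        value = self.store.get(arg)
        self.stdout.write(f"{value}\n" if value is not None else "(nil)\n")

    def do_delete(self, arg: str) -> None:
        """delete KEY: remove a key."""
        self.stdout.write("deleted\n" if self.store.delete(arg) else "not found\n")

    def do_quit(self, arg: str) -> bool:
        """quit: leave the REPL."""
        return True

    do_EOF = do_quit  # Ctrl-D also exits
```

Wrapping the bound lookup method in `lru_cache` per instance, instead of decorating the method at class level, keeps each store's cache separate, and clearing it on every write prevents stale reads. To try the REPL interactively, run `KVShell(KeyValueStore("kv.json")).cmdloop()`.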

Prompt-Driven Development: How It Works

The primary interface for GPT-Engineer is a design document that feeds requirements into the engine. This document outlines core components, data models, interfaces, and performance expectations.

A high-quality prompt might include:

- Overview of the application

- Description of core modules and interactions

- API design with function signatures

- Performance and caching strategy

- Optional integration tools or UI requirements

GPT-Engineer parses this document, asks for any missing details, and begins generating the necessary files.
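To make the checklist above concrete, here is a small sketch that assembles those sections into a plain-text prompt and flags required sections that are still empty. The `DesignDoc` class, the section titles, and the rule-based `missing_sections` check are assumptions for illustration; GPT-Engineer itself relies on the language model, not a fixed checklist, to decide which questions to ask.

```python
from dataclasses import dataclass
from typing import List

# Section titles mirror the checklist above; "integrations" is optional.
SECTIONS = {
    "overview": "Overview",
    "modules": "Core modules and interactions",
    "api": "API design",
    "performance": "Performance and caching",
    "integrations": "Integrations and UI (optional)",
}
REQUIRED = ("overview", "modules", "api", "performance")


@dataclass
class DesignDoc:
    """Hypothetical container for the parts of a GPT-Engineer design prompt."""

    overview: str = ""
    modules: str = ""
    api: str = ""
    performance: str = ""
    integrations: str = ""

    def missing_sections(self) -> List[str]:
        """Required sections that are still empty: candidates for clarifying questions."""
        return [name for name in REQUIRED if not getattr(self, name).strip()]

    def to_prompt(self) -> str:
        """Render the non-empty sections, in checklist order, as a design document."""
        parts = []
        for name, title in SECTIONS.items():
            text = getattr(self, name).strip()
            if text:
                parts.append(f"{title}\n\n{text}")
        return "\n\n".join(parts) + "\n"
```

For instance, a `DesignDoc` with only `overview` and `api` filled in would report `["modules", "performance"]` as the sections still to be written before the prompt is handed over.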

Features That Set GPT-Engineer Apart

1. Interactive Clarification

GPT-Engineer asks the user clarifying questions, much as a junior developer would during sprint planning. This reduces the assumptions it has to make about vague or incomplete requirements, which leads to more accurate code generation and better project alignment from the outset.

2. Modular Code Output

Every codebase generated by GPT-Engineer is structured into clean, modular components. This separation of concerns makes it easier to maintain, test, and extend. Developers can jump into any part of the code without having to decipher a tangled monolith.

3. Full-Stack Capabilities

GPT-Engineer supports frontend, backend, and API generation in a single flow. Whether it’s a Flask backend or a React interface, the engine builds out entire applications cohesively. This flexibility supports varied use cases, from simple tools to multi-tier platforms.

4. Design Consistency

By basing the entire codebase on one cohesive design document, GPT-Engineer ensures consistency in naming conventions, data handling, and module interactions. This uniformity reduces technical debt and fosters team-wide readability and understanding.

5. Reusability and Extensibility

Code generated by GPT-Engineer isn’t throwaway or locked into narrow use cases. Its modular structure, clear documentation, and logic-driven approach make it easy to adapt or scale across new use cases, platforms, and business requirements.

6. Prompt-Driven Refinement

GPT-Engineer responds to updated prompts without requiring a full rebuild. Developers can refine their prompts iteratively, and the tool’s improve mode applies the changes to the existing code. This makes it well suited to agile workflows where requirements evolve during the build process.

7. Intelligent Defaults

Out of the box, GPT-Engineer defaults to common best practices for most programming tasks. Whether it’s RESTful routing, data validation, or error handling, the generated code follows patterns that seasoned developers would approve of, which reduces onboarding time.

8. Built-In Documentation

The tool not only generates code but also adds inline comments and API documentation. This supports onboarding and handoffs, especially for junior developers or teams collaborating across time zones and languages.

9. Test Integration

Where applicable, GPT-Engineer generates unit and integration tests based on the design prompt. It understands common testing frameworks and includes testing logic that aligns with business requirements, improving reliability.

10. Extensible Tooling Ecosystem

GPT-Engineer can be easily paired with CI/CD tools, linters, formatters, and API testers. It’s built to fit into existing dev workflows rather than replace them, making it ideal for teams that want to scale up without disruption.


Limitations and Workarounds

GPT-Engineer excels in greenfield development but has limitations in refactoring existing code. Its performance tends to drop when tasked with understanding legacy codebases, complex branching logic, or interdependent modules.

For tasks like refactoring or continuous improvement, developers may consider pairing GPT-Engineer with other tools like Aider or the ChatGPT Code Generator plugin.

How Teams Use GPT-Engineer

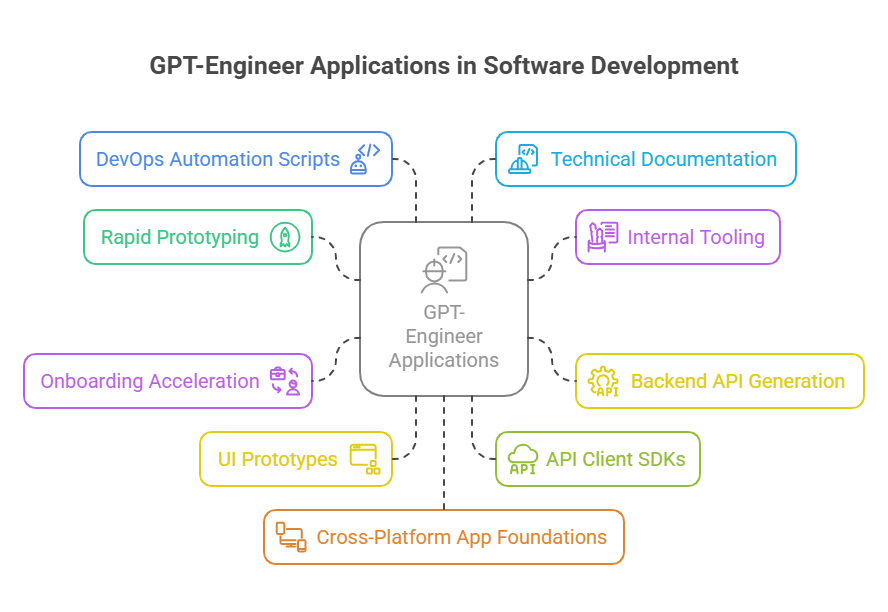

1. Rapid Prototyping

Startups use GPT-Engineer to turn ideas into MVPs within days. Whether it’s a landing page or a microservice, they input design prompts and receive production-ready codebases. This cuts development time and cost and allows quicker user feedback for validation.

2. Internal Tooling

Organizations use GPT-Engineer to build internal dashboards, CRUD systems, and automation tools with minimal engineering overhead. It frees up senior developers by offloading repetitive tasks, enabling more focus on complex feature development and business-critical innovations.

3. Backend API Generation

Developers use it to spin up structured REST or GraphQL APIs that align with business logic. GPT-Engineer takes care of models, routes, and validations, ensuring the backend is clean, consistent, and ready for integration with frontend apps or third-party services.

4. Onboarding Acceleration

Engineering teams leverage GPT-Engineer to create starter kits or template projects that help new hires get up to speed. These pre-generated codebases come with standardized practices, reducing ramp-up time and ensuring early contributions are aligned with team standards.

5. UI Prototypes

Designers and product managers use GPT-Engineer in tandem with Figma-to-code tools to bring UI wireframes to life. This enables better stakeholder feedback before frontend engineers get involved, so designs are validated early and rework cycles are reduced.

6. API Client SDKs

Product teams often need client SDKs to communicate with their APIs. GPT-Engineer can auto-generate these libraries in multiple languages based on prompt-driven API definitions, significantly speeding up integration timelines for partners and external developers.

7. DevOps Automation Scripts

DevOps teams use GPT-Engineer to scaffold CI/CD pipelines, Docker setups, or infrastructure-as-code templates. By converting requirements into shell scripts or YAML configs, it enables repeatable deployments and environment consistency across cloud and hybrid systems.

8. Technical Documentation

Engineering managers and tech writers feed project blueprints into GPT-Engineer to auto-generate technical documentation. This ensures consistent communication between teams and streamlines the creation of architecture overviews, API usage guides, and module references.

9. Cross-Platform App Foundations

Teams use GPT-Engineer to set up the initial structure for cross-platform mobile or desktop applications. It provides skeleton code for iOS, Android, or web platforms, giving developers a solid base for feature development.

10. Research and Experimentation

R&D labs and academic researchers use GPT-Engineer for quick hypothesis testing. It lets them create throwaway but functional prototypes to validate machine learning workflows, simulate data pipelines, or test new development patterns in record time. Bootcamps and universities also use GPT-Engineer to demonstrate software design, code generation, and prompt-based development workflows.

Why GPT-Engineer Represents the Future of Development

1. Rapid Development Cycles

GPT-Engineer enables lightning-fast prototyping and production builds. By generating entire codebases from prompt documents, it slashes time-to-market dramatically, helping teams deliver MVPs, test features, or pivot faster without getting bogged down in repetitive coding work.

2. Cost Efficiency

Reducing manual coding tasks minimizes overhead. Small teams can achieve what once required multiple engineers. GPT-Engineer delivers quality output that cuts labor hours, allowing companies to reallocate engineering budgets to innovation and strategic initiatives.

3. Democratization of Development

Prompt engineering opens up coding to a wider audience. Non-technical founders, designers, and analysts can generate functional applications using plain English instructions, bridging the gap between ideation and development.

4. Built-In Best Practices

Code generated by GPT-Engineer reflects industry standards like RESTful routing, error handling, and modular architecture. Developers don’t have to spend time enforcing conventions; GPT-Engineer applies them by default.

5. Enhanced Collaboration

Cross-functional teams can collaborate more effectively with a shared design document. GPT-Engineer aligns output with business needs, helping teams iterate in sync with fewer miscommunications between product managers and developers.

6. Scalable Architecture

Projects built with GPT-Engineer are designed with modularity in mind. The resulting architecture allows teams to scale their software with ease, whether that means adding features or handling growing user demand.

7. Learning and Upskilling

Junior developers benefit by studying GPT-Engineer’s output. The generated code often includes inline comments and documentation, offering a learning aid while accelerating their contribution to real projects.

8. Better Documentation Coverage

Because it auto-generates documentation, GPT-Engineer strengthens one of development’s weakest links. The projects it outputs typically include API references, usage examples, and design rationales, making codebases easier to onboard onto and maintain.

9. Integration Ready

The code GPT-Engineer outputs can be immediately plugged into CI/CD pipelines, containerized, or deployed on cloud platforms. It supports infrastructure-as-code principles, making it highly compatible with modern DevOps workflows.

10. Forward-Compatible with AI Workflows

As LLM-based tools evolve, GPT-Engineer fits naturally into a future where software is co-developed by humans and AI. Its prompt-driven foundation aligns with how large-scale systems will be ideated, developed, and maintained in an AI-first world.

In summary:

- Speed: Quickly spin up a codebase with robust architecture

- Efficiency: Eliminate boilerplate tasks and accelerate delivery

- Scalability: Modular output that supports real production environments

- Accessibility: Opens software development to non-developers via prompt engineering

How Can Neuronimbus Help?

At Neuronimbus, we understand the massive shift AI tools like GPT-Engineer are bringing to modern development. Our role is to help you integrate GPT-Engineer into your workflow efficiently, whether that means automating boilerplate code, accelerating prototyping, or scaling production systems. From prompt engineering to CI/CD integration, we make sure the AI-generated code aligns with your architecture and business logic. We guide your teams in adopting a hybrid model of AI and human collaboration that boosts output and reduces errors.

Whether you’re a startup with an idea or an enterprise modernizing legacy systems, Neuronimbus offers the strategy, technical implementation, and support to put GPT-Engineer to work for you. Let us help you future-proof your development process, harness the power of AI, and bring smarter, scalable solutions to market faster.

From design ideation to code generation, GPT-Engineer reshapes how we approach development in the AI era.

At Neuronimbus, we help organizations integrate tools like GPT-Engineer into their software lifecycle to increase developer velocity, reduce technical debt, and bring intelligent automation to product development.